The pace at which generative AI is advancing and changing is a sight to behold. I have immersed myself in it as much as possible: multiple daily newsletters, video updates, and how-to articles; reviewing research papers, watching trending AI GitHub repos, listening to thought leaders, and trying to understand where we are and where we will be in six months. The regular news media is months behind, and often just instigates division over big-picture ideas.

“AI is taking jobs! AI is biased because humans have biases and supply the training data! AI will take over governments and those building the AIs will take over the world! AI will steal from the poor and give to the rich! Or they will steal from the rich and give to the poor!”

– big news media

I am interested in none of that. Hyperbole and panic are not my idea of a good time. I am interested in outcomes from using current tools and strategies, and in knowing what is coming soon in order to prepare for it. In this blog series, I will dive deep into what you can do with generative AI based on your budget, time, and available resources. Many things can be done cheaply or for free. More things can be done better or faster with a little bit of money, and other things that could never have been done before can now be done with a lot more money.

It is often the loudest voice in a room that gets the most mindshare among the people who listen. This does not mean that it is the best or the only option. I’m not saying it is bad either. The reason that every company and service sprinkles AI and ML into its products is that right now there is a land grab. The first to market get the initial influx of money and therefore have the ability to keep iterating and stay ahead.

That being said, no company or organization can rest on its laurels. That seed money is only valuable if it is put towards strategic short-term initiatives that keep advancing the state of the art and adapting to market changes. Skunkworks projects need to be monetized in months, not years. When a whitepaper gets released on arXiv.org, the technology will be put into production somewhere within 24 hours. Then within a week or so, it will become a commercial endeavor. Within a month, there will be an open-source version of it. Within two months, there will be a dozen services built upon the open-source version, all getting investments and doing their own land grab for market share.

The basic things to know in point form.

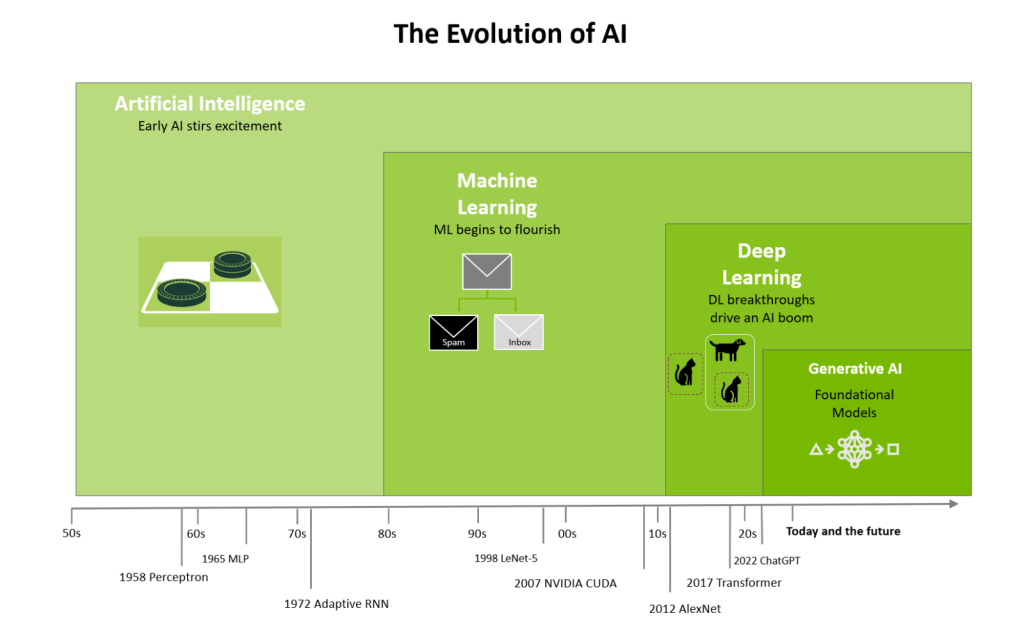

1. Generative AI is fairly new as far as AI goes.

- Early AI started in the 1950s and 1960s

- Machine Learning started in the 1980s

- Deep Learning advanced substantially in the late 2010s

- Generative AI came to everyone in the early 2020s

2. The brains behind GenAI are “foundational models”

- A foundational model is like a compressed version of much of the knowledge humans have written down.

- Models are sized by their number of “parameters”, and larger models are generally trained on larger sources. Some open source models have billions of parameters; some closed source models are reported to have trillions.

- It may take months, with thousands of GPUs working in concert at full utilization, to create a foundational model.

- It costs millions of dollars to train a foundational model, making it impossible for many organizations to build one.
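
To see how "months" and "thousands of GPUs" turn into "millions of dollars", here is a back-of-the-envelope sketch. The GPU count, duration, and hourly rate are assumptions picked for illustration, not figures from any real training run, and the function name `training_cost_usd` is hypothetical.

```python
def training_cost_usd(gpu_count: int, days: float, usd_per_gpu_hour: float) -> float:
    """Rough compute bill: GPUs x hours x hourly rate.

    Ignores staff, data preparation, storage, and failed runs.
    """
    gpu_hours = gpu_count * days * 24
    return gpu_hours * usd_per_gpu_hour


# Hypothetical run: 2,000 GPUs for 90 days at $2.00 per GPU-hour.
cost = training_cost_usd(gpu_count=2_000, days=90, usd_per_gpu_hour=2.00)
print(f"${cost:,.0f}")  # prints $8,640,000
```

Even with modest assumptions the compute bill alone lands in the millions, before anyone is paid to run it.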

3. API calls are the lifeblood of GenAI

- Many companies that build foundational models make money by providing access to them via API and charging per execution.

- The costs are calculated from a combination of the type of model (capabilities and performance) and the amount of data sent in and out of it per execution (measured in tokens).

- Some models are free to use, but they have to run somewhere. These are called open models. An example is Llama 3, by Meta.

- Smaller open models can run on private machines or servers. Very small ones can run on a CPU (like Phi-3 by Microsoft), but most will need a modern GPU.

- People who don’t have GPUs can use APIs to reach an environment that has GPUs or LPUs, and run open models there, often much cheaper and faster than API access to a closed model. GroqCloud or Hugging Face can provide this.
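
The per-token pricing described above can be sketched as a small calculator. Everything named here is an assumption for illustration: the model names are made up, and the prices are placeholders rather than any provider's real rates. Many providers price input and output tokens separately, which is what the sketch assumes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Price per one million tokens, in US dollars (hypothetical values)."""
    usd_per_million_input: float
    usd_per_million_output: float


def call_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single API call given the tokens sent in and received back."""
    total = (input_tokens * pricing.usd_per_million_input
             + output_tokens * pricing.usd_per_million_output)
    return total / 1_000_000


# Placeholder price list; check your provider's current pricing page.
PRICES = {
    "small-open-model": Pricing(0.10, 0.20),
    "large-closed-model": Pricing(10.00, 30.00),
}

for name, pricing in PRICES.items():
    per_call = call_cost(pricing, input_tokens=1_500, output_tokens=500)
    monthly = per_call * 100_000  # assumed volume: 100,000 calls per month
    print(f"{name}: ${per_call:.5f} per call, ${monthly:,.2f} per month")
```

The same workload differs in cost by orders of magnitude depending on the model, which is why the choice of model matters as much as the volume of calls.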

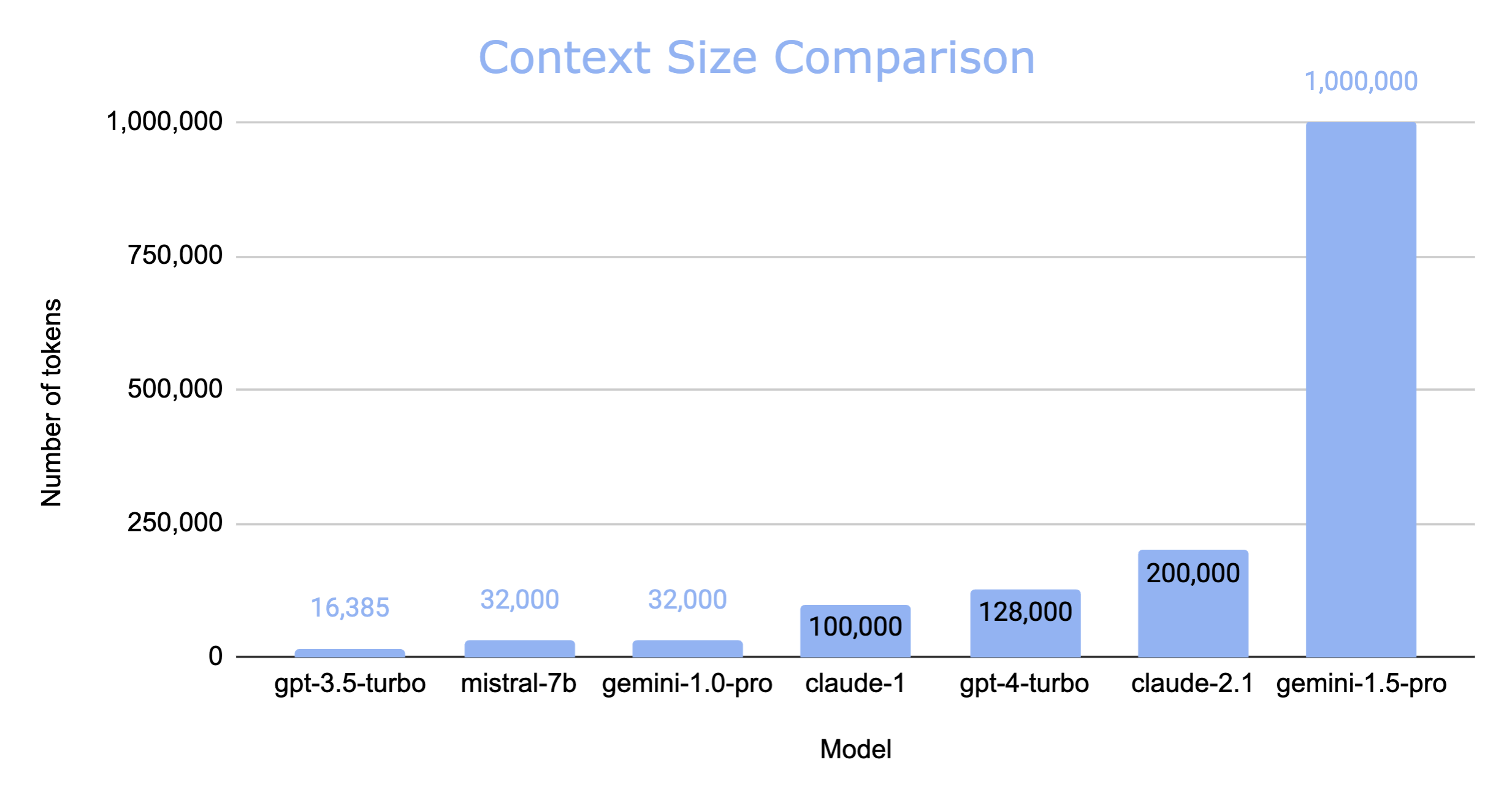

4. How much a model can chew and swallow at once is called a context window

- Context windows are sized by the number of tokens in them.

- A token resembles a syllable. This statement has 13 words and 15 tokens.

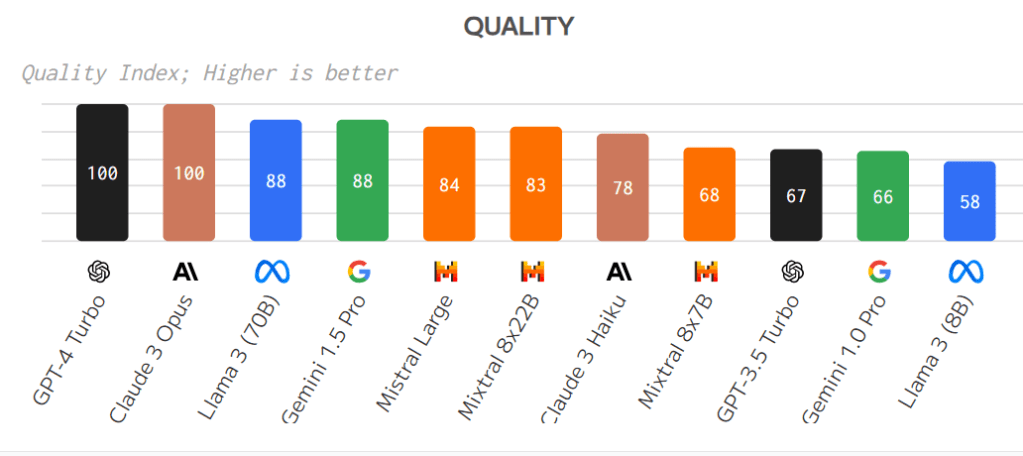
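
As a rough sketch of what "fitting in the context window" means, the snippet below estimates tokens from a word count using the 15-tokens-per-13-words ratio of the example sentence above. That ratio is an assumption borrowed from one sentence; a real tokenizer will give different counts depending on the model and the text. The function names are hypothetical.

```python
import math

# Ratio taken from the single example sentence; a heuristic, not a constant.
EXAMPLE_TOKENS = 15
EXAMPLE_WORDS = 13


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the number of whitespace-separated words."""
    words = len(text.split())
    return math.ceil(words * EXAMPLE_TOKENS / EXAMPLE_WORDS)


def fits_context(prompt: str, context_window: int, reserved_for_output: int = 0) -> bool:
    """Check that the prompt plus the room reserved for the reply fits the window."""
    return estimate_tokens(prompt) + reserved_for_output <= context_window


sentence = "A token resembles a syllable. This statement has 13 words and 15 tokens."
print(estimate_tokens(sentence))                       # prints 15
print(fits_context(sentence, context_window=200_000))  # prints True
```

Reserving space for the output matters because the reply usually counts against the same window as the prompt.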

5. Some of the biggest players in foundational models are:

- OpenAI with GPT-4 (heavily invested in by Microsoft)

- Anthropic with Claude (founded by former OpenAI staff, who split off due to ethical concerns)

- Mistral AI with Mixtral

- Meta with Llama

- Google with Gemini

- Cohere with Command R+

- Databricks with DBRX

Here is a comparison of the different models from Artificial Analysis:

| Metric | Best Performing Models | Subsequent Performers |
|---|---|---|
| Quality | Claude 3 Opus, GPT-4 Vision | GPT-4 Turbo, GPT-4, Llama 3 (70B) |
| Throughput (tokens/s) | Llama 3 (8B) (216 t/s), Gemma 7B (165 t/s) | Command-R, GPT-3.5 Turbo Instruct, Mixtral 8x7B |
| Latency (seconds) | Command-R (0.15s), Command-R+ (0.16s) | Mistral Small, Mistral Medium, Command Light |
| Blended Price ($/M tokens) | Llama 3 (8B) ($0.14), Gemma 7B ($0.15) | OpenChat 3.5, Llama 2 Chat (7B), Mistral 7B |
| Context Window Size (tokens) | Gemini 1.5 Pro (1M), Claude 3 Opus (200k) | Claude 3 Sonnet, Claude 3 Haiku, Claude 2.1 |

6. It is very easy to build an app on top of GenAI.

- A plethora of SaaS solutions and services are built on top of core LLM capabilities, with a pretty frontend.

- The time to build and scale the frontend is trivial as the majority of the logic can be done by the model on the backend, which is accessed via API.

- The code that is used to build the frontend can be generated by a code-focused GenAI, further trivializing the effort.

- A PoC of an app can be easily hosted on Hugging Face, Google Vertex AI, Azure AI Studio, or Amazon SageMaker.

- PoCs will almost always work, but scaling is a different matter: API usage costs climb steeply, as do bandwidth and the additional infrastructure needed for security, availability, performance, dev / test / QA, etc.

- Feel free to PoC, but plan to fail at scale
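
To show how thin the application layer can be, here is a minimal sketch of a "summarizer app". The request and response shapes follow the widely used chat-completions style (a `messages` list in, `choices[0].message.content` out); the model name `example-model` and the `fake_send` transport are placeholders, so nothing here calls a real service. In a real app, `send` would be an authenticated HTTP POST to your provider.

```python
from typing import Callable


def build_chat_request(model: str, system_prompt: str, user_input: str) -> dict:
    """Assemble a chat-style request body: one system message, one user message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_input},
        ],
    }


def summarize(text: str, send: Callable[[dict], dict], model: str = "example-model") -> str:
    """The whole 'app': wrap the user's text in a prompt and return the model's reply."""
    payload = build_chat_request(model, "Summarize the user's text in one sentence.", text)
    response = send(payload)
    return response["choices"][0]["message"]["content"]


def fake_send(payload: dict) -> dict:
    """Stand-in for the HTTP call to a hosted model; returns a canned reply."""
    user_text = payload["messages"][-1]["content"]
    reply = f"(summary of {len(user_text)} characters)"
    return {"choices": [{"message": {"role": "assistant", "content": reply}}]}


print(summarize("Generative AI is advancing quickly.", fake_send))
```

Passing the transport in as a function keeps the PoC testable without network access. The parts that make scaling hard (retries, rate limits, authentication, cost controls) all live behind that one `send` call.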

7. Chatbots are great, but…

- The true power of large language models is often missed by users who treat them as a replacement for Google, Wikipedia, or a smart assistant like Alexa or Google Assistant.

- Some things that they can do very well are: data analysis, text and data manipulation, coding, understanding objectives and creating tasks, and doing tasks (without training) that would normally require manual actions by a person. A model can also take on multiple personas that communicate with each other like a team.

- These capabilities are called agents, and they can be interactive or autonomous. They can use tools, and create other agents if needed to complete a task.

- Robotic Process Automation, or RPA, is used by many organizations to automate tasks that would normally be done by a person. But if you use an agent to create the task workflow on demand, then this is called IPA, or Intelligent Process Automation.
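
The agent idea above, a model that decides which tool to use and when it is done, can be sketched as a short loop. This is a toy under stated assumptions: `scripted_model` is a hard-coded stand-in for an LLM, and the tool names and the decision format (`{"tool": ...}` or `{"final": ...}`) are invented for illustration rather than taken from any agent framework.

```python
from typing import Callable


def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


# Tools the agent is allowed to call, looked up by name.
TOOLS: dict[str, Callable] = {"add": add, "word_count": word_count}


def scripted_model(goal: str, history: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM: asks for one tool call, then answers."""
    if not history:
        return {"tool": "word_count", "args": {"text": goal}}
    return {"final": f"The goal contains {history[-1]['result']} words."}


def run_agent(goal: str, model: Callable[[str, list[dict]], dict], max_steps: int = 5) -> str:
    """Loop: ask the model what to do, run the chosen tool, feed the result back."""
    history: list[dict] = []
    for _ in range(max_steps):
        decision = model(goal, history)
        if "final" in decision:
            return decision["final"]
        tool = TOOLS[decision["tool"]]
        result = tool(**decision["args"])
        history.append({"tool": decision["tool"], "result": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("count the words in this goal", scripted_model))
# prints: The goal contains 6 words.
```

The `max_steps` cap is there because an autonomous loop with a real model needs a hard stop; each iteration is also a billable API call.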

8. What is the difference between RPA and IPA?

- AI-Enhanced RPA: Intelligent Process Automation (IPA) merges AI with RPA, enabling systems to learn and improve from experience, expanding capabilities beyond simple task automation.

- Versatility in Automation: IPA adapts to changes and handles complex processes using AI technologies like natural language processing, making it superior to traditional RPA in flexibility and efficiency.

- Significant Cost Savings: IPA outperforms RPA in cost-effectiveness, with companies reporting savings exceeding $50 million and substantial increases in ROI and operational capacity.

- Efficiency in Human Resources: By automating complex tasks, IPA minimizes the need for manual human input, allowing employees to engage in higher-value work and improving overall productivity.

- Enhanced Business Processes: IPA transcends RPA limitations, fostering significant business transformation through more adaptive and efficient automation strategies.
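
A toy contrast may make the difference concrete. In this sketch the RPA workflow is a fixed list of steps, while the IPA workflow is planned per document. The planner here is a plain rule standing in for an AI agent, and the invoice fields, step names, and exchange rate are all made up for illustration.

```python
from typing import Callable

Step = Callable[[dict], dict]


def validate(doc: dict) -> dict:
    if doc["amount"] <= 0:
        raise ValueError("amount must be positive")
    return doc


def convert_to_usd(doc: dict) -> dict:
    rate = {"EUR": 1.10}[doc["currency"]]  # hypothetical fixed exchange rate
    return {**doc, "amount": round(doc["amount"] * rate, 2), "currency": "USD"}


def post_to_ledger(doc: dict) -> dict:
    return {**doc, "posted": True}


# RPA: the same steps every time, regardless of what the document looks like.
RPA_WORKFLOW: list[Step] = [validate, post_to_ledger]


def plan_workflow(doc: dict) -> list[Step]:
    """Stand-in for an agent that builds the workflow on demand from the input."""
    steps: list[Step] = [validate]
    if doc["currency"] != "USD":
        steps.append(convert_to_usd)
    steps.append(post_to_ledger)
    return steps


def run(steps: list[Step], doc: dict) -> dict:
    for step in steps:
        doc = step(doc)
    return doc


invoice = {"amount": 100.0, "currency": "EUR"}
print(run(RPA_WORKFLOW, invoice))            # posted as-is, still in EUR
print(run(plan_workflow(invoice), invoice))  # converted to USD, then posted
```

The fixed workflow handles the case it was built for; the planned workflow adapts to an input nobody scripted in advance, which is the flexibility IPA is claimed to add.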

Conclusion:

In summary here are the most important points of this article:

- Rapid Advancement of Generative AI: Generative AI is evolving swiftly, and keeping up takes daily attention to newsletters, videos, research papers, GitHub repos, and industry leaders.

- Focus on Practical Use: The blog series aims to explore practical applications of generative AI, considering different budgets and resource availability.

- Market Dynamics: There’s a competitive rush in AI and machine learning integration, with companies quickly commercializing new technologies.

- Generative AI Development: AI has progressed from early work in the 1950s to generative AI reaching everyone in the early 2020s, and the foundational models behind it require extensive resources to train.

- API Usage: Foundational models are accessible via API, which forms a critical revenue stream for companies. Costs are associated with model complexity and the amount of data processed.

- Context Windows and Tokenization: A context window, sized in tokens, sets how much a model can take in at once.

- Leading AI Models Comparison: The top generative AI models, including those from OpenAI, Anthropic, and Google, differ in quality, throughput, latency, price, and context window size.

- Building on AI: It’s straightforward to develop a PoC application on generative AI, but scaling it brings steep costs and extra infrastructure.

- Difference Between RPA and IPA: Clarifies the enhancements IPA brings over traditional RPA, highlighting its adaptability and potential for cost savings.

What you should do:

Explore AI Tools:

- Research: Start by researching different generative AI platforms like OpenAI, Google AI, and Microsoft Azure AI to understand their offerings.

- Use Free Trials: Many platforms offer free trials or free-tier usage. Take advantage of these to test functionalities without financial commitment.

- Attend Workshops: Participate in workshops or webinars that focus on practical applications of AI tools. These can provide hands-on experience and insights from experts. For relevant discussions on AI tools and their impacts, listen to episodes from the Canadian Cybersecurity Podcast.

Stay Updated and Flexible:

- Subscribe to Newsletters: Sign up for AI-focused newsletters from credible sources like MIT Technology Review or The AI Report.

- Follow Key Influencers: Follow AI experts and thought leaders on platforms like LinkedIn or X to get real-time updates and insights.

- Join AI Communities: Engage with communities on platforms like Reddit, Stack Overflow, or specialized forums where discussions about the latest AI developments occur. Additionally, check out articles on DesigningRisk.com for in-depth analysis and tips on managing risks with generative AI.

Experiment and Learn:

- Build Prototypes: Run tests locally in containers, or use platforms like Hugging Face to build and test prototypes of AI applications.

- Learn Through Online Courses: Enroll in online courses on platforms like Coursera or Udemy that focus on AI development and applications.

- Feedback and Iterate: Regularly seek feedback on your AI prototypes and use it to make improvements, learning through the process of iteration. The Canadian Cybersecurity Podcast often features experts discussing the iterative process of technology development and deployment.

Participate in Our Community:

- Read and Comment: Regularly read the blog series and leave comments with your insights or questions to foster a dialogue.

- Share Experiences: Share your own experiences and challenges with using AI in your field. This can be done through guest blog posts or social media interactions linked to the blog and mentioned websites.

- Network at Events: Attend AI conferences and meetups mentioned in the blog to network with other professionals and discuss common challenges and solutions.
